The Blackbird.AI RAV3N Blog Roundup: Global Events That Defined Narrative Attacks on Organizations in 2023

From bots and climate change to cyberattacks and conspiracies: Chronicling the impact of narrative attacks that caused financial and reputational harm in 2023.

Posted by Blackbird.AI’s RAV3N Narrative Intelligence and Research Team on December 27, 2023

In 2023, the landscape of narrative attacks evolved dramatically, presenting new challenges and highlighting key events that reshaped our understanding of digital misinformation. From geopolitical conflicts to the implications of advanced AI technologies, this blog post, written by Blackbird.AI’s RAV3N Narrative Intelligence and Research Team, delves into the most significant narrative attacks of the year, offering insights into their impact on global events and public perception. It also emphasizes the need for innovative technologies to combat this complex and evolving threat.

From Deep Fakes to Global Stakes: The 2023 Narrative Attack Review

Blackbird.AI’s RAV3N Narrative Intelligence and Research Team examines the surge of sophisticated narrative attacks swirling around global events, including the Israel-Hamas conflict and the Russia-Ukraine war, and discusses the rise of generative AI in creating deep fakes and spreading disinformation. The post underlines the critical need for innovative solutions to navigate the complex web of digital disinformation and safeguard information integrity.

Narrative Attacks: A New Threat Vector for Security Leaders

Blackbird.AI CMO Dan Lowden and Chief Strategy and Innovation Officer Dr. John Wissinger discuss the emerging threat of narrative attacks in cybersecurity, highlighting their impact on organizations. The article emphasizes the importance of understanding and addressing these attacks, which involve misinformation and disinformation, to protect companies from financial, operational, and reputational harm. It also underlines the significant role of narrative intelligence platforms in providing visibility and protection against these evolving cyber risks.

Narrative Attacks: A Top Concern for Companies and Communication Leaders

Blackbird.AI CMO Dan Lowden and Chief Strategy and Innovation Officer Dr. John Wissinger address the growing concern of narrative attacks in corporate communications. Fueled by misinformation and disinformation, these attacks pose significant reputational and financial risks to organizations. The post emphasizes the universal impact of these attacks across industries and the urgent need for companies to understand and counteract harmful narratives. It underscores the role of narrative intelligence platforms in providing visibility and protection against these threats, highlighting their importance in crisis management and brand protection.

Inside the GameStop Saga: A Lesson in Narrative Attacks and Market Manipulation

Beatrice Titus analyzes the various narratives and public reactions to the GameStop stock trading frenzy in January 2021. The post highlights how actions by Robinhood and other brokers to restrict trading led to a surge in conspiracy theories and negative sentiment online. It dissects key narratives, including accusations of market manipulation, elitist biases in trading, and the notion of a compromised ‘free market,’ showcasing the significant impact of such events on financial institutions and public trust in the market.

When Insider Threats Backfire: The Case of Jareh Dalke, Russia, and the NSA

Rennie Westcott, Beatrice Titus, and Anne Griffin examine the case of Jareh Dalke, who attempted to sell classified information to someone he believed was a Russian agent but was actually an undercover FBI agent. Dalke’s actions, driven by financial desperation and ideological beliefs, sparked various online narratives, ranging from calls for harsher punishment to discussions on poverty and student debt. The case highlights the complex challenges of insider threats and the impact of such incidents on public trust and perception.

Blackbird.AI CEO Wasim Khaled deconstructs the implications of a new executive order by President Biden on AI safety and ethics. He compares the initial resistance to AI regulations to the early skepticism about seatbelt laws, emphasizing the necessity of such measures for safe and ethical AI development. The post highlights the balancing act between fostering AI innovation and implementing safeguards against risks like bias, misuse, and narrative attacks. It stresses the importance of global cooperation, robust auditing mechanisms, and continuous evaluation for maintaining AI integrity and public trust.

Misleading Narratives Rippled Across the Internet After Silicon Valley Bank’s Collapse

Daniel Gonzalez dives into the spread of various misleading narratives following the collapse of Silicon Valley Bank. These narratives included claims of a politically motivated bank run, debates about taxpayer bailouts, alleged ties between California Governor Gavin Newsom and the bank, insider stock sales by the bank’s executives, claims that the bank failed because of its support for progressive causes, and conspiracy theories involving Jeffrey Epstein. The post highlights how these narratives can significantly affect organizations’ reputations and emphasizes the importance of narrative intelligence in managing such crises.

Sony Faces Reputational Damage After Cyberattacks

Beatrice Titus and Thomas Hynes analyze the reputational impact on Sony following a series of cyberattacks. They highlight various online narratives that emerged, including concerns over personal data safety, frustration with Sony’s security measures, anger over price hikes amid poor security, speculation about the CEO’s retirement, and debates over console superiority. The post emphasizes the significant damage to Sony’s reputation and trust, illustrating the broader risks cyberattacks pose to brand perception.

When Cyberattacks Hit Brands Like MGM, Reputation Hangs in the Balance

Beatrice Titus and Anne Griffin analyze the fallout of a cyberattack on MGM Resorts. They highlight how such attacks cause immediate disruption and financial losses, significantly damaging brand reputation and public trust. The analysis explores various online narratives following the attack, including operational challenges, confusion, and competitor comparisons. The post underscores companies’ need for robust cybersecurity and narrative intelligence strategies to protect against technical and reputational threats.

Conspiracy Theories Abound Ahead of Nationwide Emergency Alert System Test

Thomas Hynes and Sarah Boutboul examine the widespread conspiracy theories surrounding a FEMA Emergency Alert System test. Viral claims suggested that 5G, COVID-19 vaccines, and the EAS alert were part of a government plot, with some even asserting it would lead to a zombie apocalypse. The post highlights the public’s reaction to these theories, ranging from humor to skepticism. It emphasizes the need for critical thinking in the face of such narratives, especially given the role of social media in amplifying them.

Bridging the Narrative Attack Gap in Cybersecurity

Blackbird.AI CEO Wasim Khaled addresses narrative threats in cybersecurity. He underlines the need for Chief Information Security Officers (CISOs) to expand their focus beyond traditional security measures to understand and mitigate narrative risks. These risks can manipulate public perception and impact brand reputation through misinformation and disinformation. The post highlights how narrative intelligence, including monitoring insider threats and public perception, is essential for a comprehensive cybersecurity strategy.

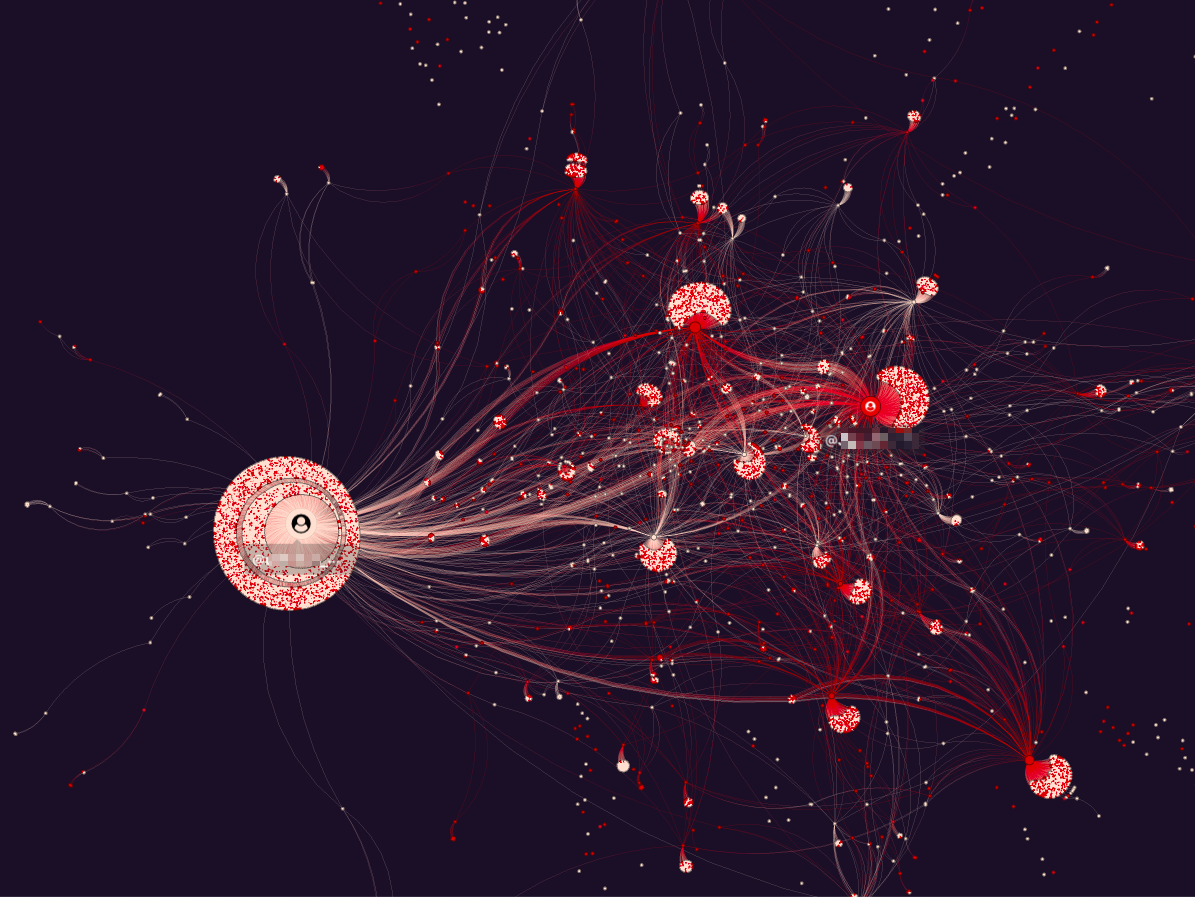

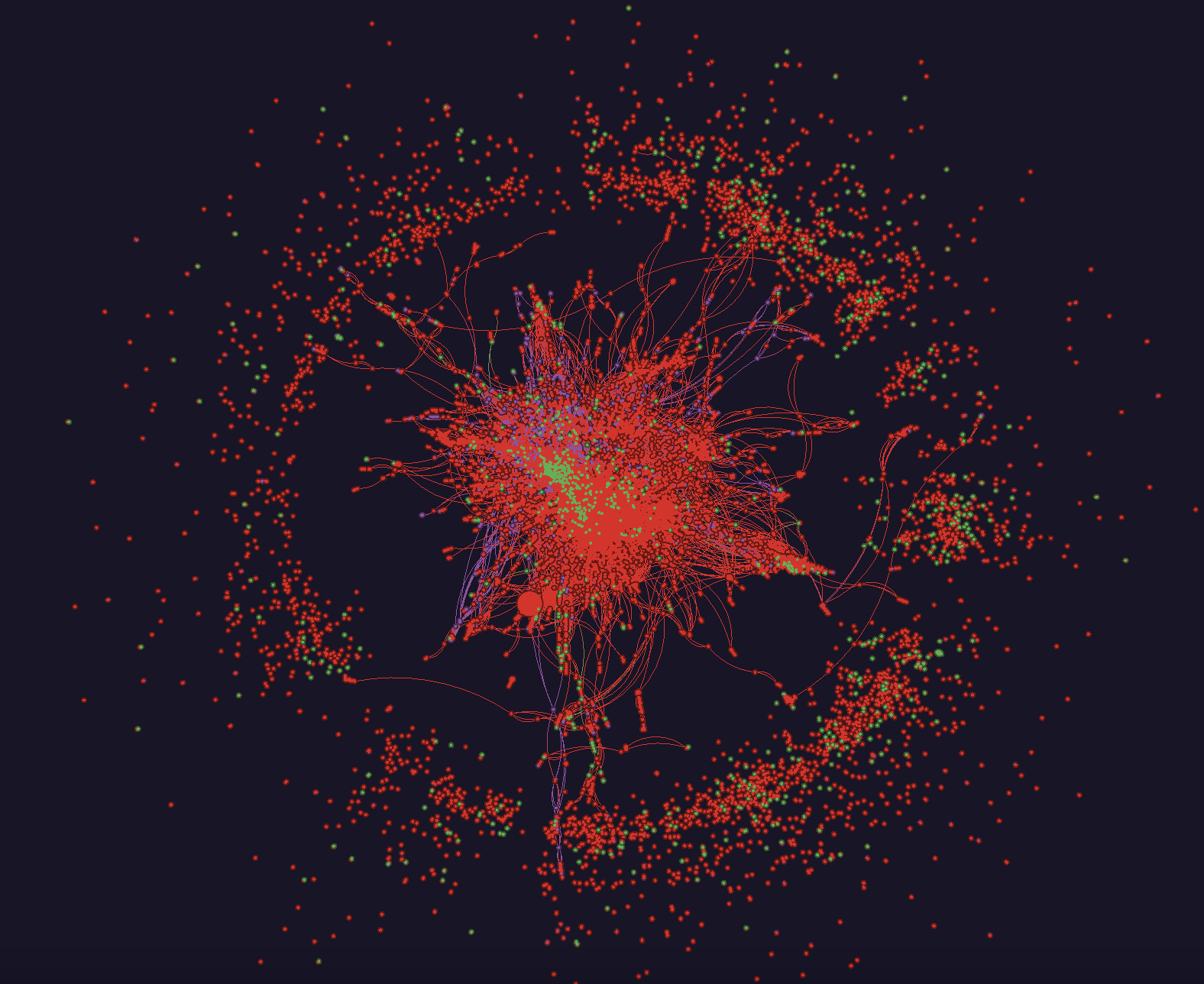

Distributed Denial of Trust: How to Know If You Have a Brand Perception Problem

Blackbird.AI CEO Wasim Khaled examines the concept of Distributed Denial of Trust (DDoT) attacks, which are sophisticated narrative attacks that use misinformation and disinformation to manipulate public perception. Like cyber DDoS attacks, these attacks flood communication networks with deceptive or controversial content, undermining public trust and damaging brands. The post stresses the need for advanced countermeasures, like narrative and risk intelligence tools, to combat these threats and preserve the integrity of online discourse.

Navigating the Warped Realities of Generative AI

Sarah Boutboul explores the rapid advancement and societal impacts of generative AI, including deep fakes and AI-generated content. She discusses the challenges in distinguishing AI-created content from human-made content, emphasizing the potential for misuse in disinformation campaigns and the erosion of trust in media. The post also addresses legal and security implications, such as defamation, copyright infringement, and corporate brand risks, underscoring the urgency for effective mitigation strategies to manage these evolving digital threats.

Safeguard Your Brand’s Attack Surface Through CCO and CISO Collaboration

Blackbird.AI examines the importance of collaboration between Chief Information Security Officers (CISOs) and Chief Communications Officers (CCOs) in tackling cybersecurity and disinformation threats. The post discusses how these traditionally separate roles intersect due to the evolving digital threat landscape, including ransomware and disinformation campaigns that impact brand reputation. It advocates for a united approach between CISOs and CCOs (creating a Fusion Team of cross-disciplinary members of the organization) to effectively respond to these intertwined cyber and reputational risks, highlighting the necessity of advanced technology solutions and informed strategies to safeguard against such threats.

The Rise of Climate Denialism: How Misinformation is Impeding Renewable Energy Development in the US

Blackbird.AI’s RAV3N Narrative Intelligence and Research Team examines the increasing use of social media to spread misinformation about renewable energy, contributing to climate denialism. This misinformation often involves skewed scientific evidence and cherry-picked data driven by various actors, including special interest groups and individuals with no expertise in the field. The post also highlights the role of influential figures and social media platforms in perpetuating these falsehoods. It emphasizes the importance of verifying information sources and the risks of greenwashing in business. The article underscores the need for effective strategies to combat misinformation and protect the integrity of environmental efforts.

Roberta Duffield writes about the problem of overemphasizing bots as the primary threat in digital misinformation campaigns. She argues that human actors, because their communication is authentic and contextually relevant, are just as capable of spreading disinformation as bots and are often more effective. The post highlights cases where humans, rather than bots, have significantly impacted narrative manipulation, emphasizing the need for a holistic understanding of digital threat actors. This broader perspective is crucial for effectively guarding against information manipulation in the digital sphere.

To learn more about how Blackbird.AI can help you in these situations, contact us here.

About Blackbird.AI

BLACKBIRD.AI protects organizations from narrative attacks created by misinformation and disinformation that cause financial and reputational harm. Powered by our AI-driven proprietary technology, including the Constellation narrative intelligence platform, RAV3N Risk LMM, Narrative Feed, and our RAV3N Narrative Intelligence and Research Team, Blackbird.AI provides a disruptive shift in how organizations can protect themselves from what the World Economic Forum called the #1 global risk in 2024.